Deep learning in cardiovascular imaging: 4 key takeaways for cardiologists

Deep learning (DL), a subset of artificial intelligence (AI) designed to learn from experience and work like the human nervous system, has already started to transform the practice of healthcare. What does this mean for cardiovascular imaging as time goes on? A team of specialists explored that very question for a new analysis published in JAMA Cardiology.[1]

“As DL systems become more ubiquitous in cardiovascular imaging, clinicians should have a general understanding of the underlying methodology,” wrote lead author Ramsey M. Wehbe, MD, a cardiologist with the Medical University of South Carolina, and colleagues. “Our goal is to clarify how DL models are developed and implemented to accomplish specific tasks in cardiovascular imaging toward demystifying these technologies.”

While the group’s analysis is worth reading in full, these are four key takeaways from their work:

1. What is deep learning? Reviewing the basics

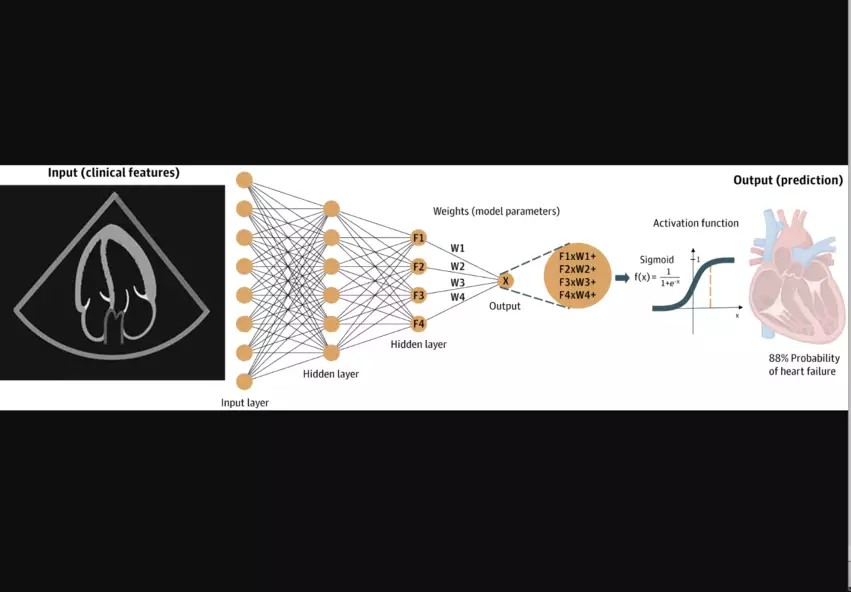

AI and DL are often discussed as if the terms were interchangeable, but DL is actually a specific kind of AI that can be trained to “learn,” “think” and analyze data in a way that is potentially superior to humans. Feed a DL model enough raw data related to a specific task, and it can make decisions related to that task much as a human would. It can also make those decisions far faster than a human might.

“DL models generate inferences directly from images based on feedback from outside information, allowing a model to be trained on cardiovascular imaging using labels derived from expert consensus, future outcomes or more invasive and costly testing,” the authors explained. “For example, a model can be trained to measure septal wall thickness on echocardiography based on consensus labels from crowdsourced experts, to predict the risk of right ventricular failure after left ventricular assist device implantation directly from echocardiographic clips using post-implant outcomes as labels, or to predict ischemia on stress echocardiography or stress nuclear myocardial perfusion imaging using labels derived from invasive coronary angiography.”

2. Validating deep learning models is crucial

Wehbe et al. emphasized that DL models must be properly validated after they are built if they are going to provide clinicians with any value. Researchers may train a DL model to identify signs of cardiovascular disease using thousands of medical images from their own hospital, for example, but they would then need to test that model’s performance on images from a separate hospital to confirm it works as intended on data it has never seen.

Skipping this step, the authors wrote, can lead to “falsely optimistic performance estimates.”
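To make the point concrete, here is a minimal sketch in Python of why an internal test alone can mislead. Everything in it is an illustrative assumption rather than anything from the JAMA Cardiology analysis: the data are simulated, the "model" is a single threshold on one made-up image feature, and the `offset` parameter simply stands in for a site-specific difference such as another scanner vendor.

```python
import random

def make_site(n, offset, seed):
    """Simulate one hospital: one image-derived feature plus a disease label.
    `offset` is a hypothetical site-specific shift in the feature values."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.random() < 0.5
        feature = rng.gauss(2.0 if label else 0.0, 1.0) + offset
        data.append((feature, label))
    return data

def fit_threshold(train):
    """'Train' a toy model: the midpoint between the two class means."""
    pos = [x for x, y in train if y]
    neg = [x for x, y in train if not y]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, data):
    """Fraction of cases where 'feature above threshold' matches the label."""
    return sum((x > threshold) == y for x, y in data) / len(data)

site_a = make_site(2000, offset=0.0, seed=0)   # the developing hospital
site_b = make_site(1000, offset=1.5, seed=1)   # a separate hospital

# Hold out part of site A as an internal test set, then train on the rest.
train, internal_test = site_a[:1500], site_a[1500:]
threshold = fit_threshold(train)

internal_acc = accuracy(threshold, internal_test)
external_acc = accuracy(threshold, site_b)
print(f"internal accuracy: {internal_acc:.2f}")
print(f"external accuracy: {external_acc:.2f}")
```

In this simulation the internal estimate comes out noticeably higher than the external one, which is the kind of gap that only shows up when the model is tested on another site's data.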

3. Examples of deep learning in action

DL is already being used in several ways to improve care for patients undergoing cardiovascular imaging, and this is most likely just the beginning.

Examples of DL in action include:

- Image planning on cardiac MR images

- Guiding novice users through image acquisition

- Reducing noise on CT images

- Improving image reconstruction

- Generating reports on diastolic function based on Doppler images

- Predicting adverse events based on single-photon emission CT stress polar perfusion maps

Wehbe and colleagues also emphasized that the U.S. Food and Drug Administration regulates AI models designed to be used in a clinical setting. Many of the DL models written about and discussed in both cardiology and radiology are still under development.

“Notably, few systems have been evaluated prospectively in a clinical trial setting, and even fewer have been evaluated at multiple clinical sites encompassing a diverse cohort representative of the target population,” the authors wrote. “For an algorithm to be accepted for clinical use, it should be prospectively validated at multiple institutions using imaging data from multiple vendors to ensure the robustness of its predictions.”

4. Many challenges in deep learning remain

When developing and implementing any type of AI model, it can be incredibly difficult to explain the “how.” If a DL model helps determine that a patient faces a heightened risk of cardiovascular disease, for example, the physician may wonder how the model reached that conclusion. Explainable DL, the authors noted, is one area researchers are currently exploring in the hope of addressing this ongoing issue.

Another big challenge when working with DL is accounting for potential bias.

“Investigators must remain vigilant in ensuring that any algorithm leads to equitable and unprejudiced predictions through careful choices about data curation, algorithm design and model evaluation,” the authors wrote.
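One simple form that vigilance can take during model evaluation is reporting a metric separately for each patient subgroup rather than only in aggregate. The Python sketch below is a hypothetical illustration, not the authors' method: the group names, the records and the choice of sensitivity as the metric are all assumptions made for the example.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: iterable of (group, truth, prediction), truth/prediction booleans.
    Returns {group: sensitivity}; groups with no positive cases are skipped."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, prediction in records:
        if truth:
            positives[group] += 1
            hits[group] += int(prediction)
    return {group: hits[group] / positives[group] for group in positives}

# Made-up evaluation results for two hypothetical subgroups.
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", False, True),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
for group, rate in sorted(rates.items()):
    print(f"{group}: sensitivity {rate:.2f}")
print(f"largest gap between groups: {gap:.2f}")
```

A large gap between subgroups does not by itself prove bias, but it is a signal that data curation and model design deserve a closer look.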

On a similar note, and this goes back to the topic of validation, some DL models may work well with one data set but poorly with another. Other models may work well at first but “degrade” over time as the data they encounter in practice drift away from the data they were trained on.

“Data set drift can be combatted via continual learning or model updates,” the authors wrote. “However, there is no substitute for repeated validation of algorithms in external, never-before-seen data sets and continuous auditing of model performance.”
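As a rough illustration of what continuous auditing could look like in software, the Python sketch below tracks a model's accuracy over a rolling window of recent cases and flags it for review when accuracy falls too far below the level seen at validation. The class name `PerformanceAudit`, the 0.90 baseline, the 0.05 tolerance and the 100-case window are all hypothetical choices for the example, not values from the analysis.

```python
from collections import deque

class PerformanceAudit:
    """Rolling check of recent accuracy against a validated baseline."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline            # accuracy measured at validation
        self.tolerance = tolerance          # allowed drop before flagging
        self.recent = deque(maxlen=window)  # keeps only the newest outcomes

    def record(self, prediction, truth):
        """Store whether the model agreed with the confirmed result."""
        self.recent.append(prediction == truth)

    def rolling_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_review(self):
        """Flag only once the window is full, to avoid noisy early alarms."""
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

audit = PerformanceAudit(baseline=0.90, tolerance=0.05, window=100)

# First 100 made-up cases: the model is right 9 times out of 10.
for i in range(100):
    audit.record(prediction=1, truth=1 if i % 10 else 0)
early_flag = audit.needs_review()

# Next 100 made-up cases: the model is right only 7 times out of 10.
for i in range(100):
    audit.record(prediction=1, truth=1 if i % 10 >= 3 else 0)
late_flag = audit.needs_review()

print(early_flag, late_flag)
```

A check like this only detects that performance has slipped; deciding whether to retrain, update or retire the model still requires the repeated external validation the authors describe.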

These highlights represent just the tip of the iceberg; the full analysis is available in JAMA Cardiology.